How We Zeroed on The Best Data Warehouse

12 December 2016

Thinking to automate AMI backups; Use AWS Lambda, with ELB tags

23 January 2017

The rise and rise of Node.js applications is, quite simply, phenomenal! Thanks to a JavaScript-everywhere architecture, the line between backend and frontend is no longer as clear as it used to be. From emerging startups to large enterprises, every business is trying to leverage the lightweight, lightning-quick Node.js runtime to build high-performance applications across numerous use cases. For those interested in drilling down a bit more, here's an article explaining why Node.js is so popular.

Equally significant, perhaps even more so, is the use of the cloud! These applications are largely built for the cloud, where you need to minimize dependencies on the OS and the environment to truly leverage its power. And that's where containerization comes to the fore, helping developers like you and me package these apps and deploy them on cloud clusters!

One of the most commonly used containerization tools is Docker. In a nutshell, Docker is a containerization platform built on OS-level virtualization. A container packages the software you build together with all of its dependencies and a base filesystem, while sharing the host's kernel, so the software runs the same way across environments. In this blog post, we'll explore how to containerize a Node.js application using Docker. Before that, though, we need to package the app into a deployable build, and for that very purpose we'll use StrongLoop Process Manager.

If you're a developer, get yourself a host machine with Docker Engine and Docker Compose installed. And it goes without saying that you need a Node.js application to containerize!

While we're at it, let's take a look at some of the Docker components, since we'll be using several of them in the subsequent steps.

- Docker Daemon: manages Docker containers on the host where it runs

- Docker CLI: used to issue commands to, and communicate with, the Docker daemon

- Docker Image Index: a repository (public or private) for Docker images

- Docker Containers: directories containing everything your application needs to run

- Docker Images: snapshots of containers or base OS (e.g. Ubuntu) images

- Dockerfile: scripts that automate the process of building images

Next up are some best practices. Trust me, although they look simple enough, one step amiss and you'll have to run around quite a bit!

- Avoid installing unnecessary packages

- Run only one process per container

- Minimize the number of layers

- Sort multi-line arguments
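To make the layer and sorting practices concrete, here is a minimal Python sketch that generates a single `RUN` instruction: it deduplicates and sorts the package names (one per continuation line) and chains update, install and cleanup so everything lands in one layer. The helper `apt_install_run` is hypothetical, not part of Docker, and the `--no-install-recommends` flag and apt list cleanup are common conventions we assume for a Debian-based image.

```python
def apt_install_run(packages):
    """Build one Dockerfile RUN instruction that installs the given apt packages.

    Hypothetical helper: duplicates are dropped, names are sorted one per
    continuation line, and update, install and cleanup share a single layer.
    """
    names = sorted(set(packages))
    lines = ["RUN apt-get update && apt-get install -y --no-install-recommends \\"]
    lines += [f"    {name} \\" for name in names]
    # Removing the apt package lists keeps the layer small
    lines.append("    && rm -rf /var/lib/apt/lists/*")
    return "\n".join(lines)


if __name__ == "__main__":
    print(apt_install_run(["vim", "curl", "git", "curl"]))
```

Sorting the list makes duplicates easy to spot and keeps diffs of the Dockerfile readable when packages are added later.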

Having checked for the best practices, here’s a quick look at some of the Dockerfile instructions we’ll use:

- FROM: Sets the base image for subsequent instructions

- MAINTAINER: Sets the author field of the generated image (newer Docker releases deprecate it in favor of LABEL)

- RUN: Executes any command in a new layer on top of the current image

- CMD: Provides defaults for an executing container, e.g. the command that starts a service

- LABEL: Adds metadata to the image

- EXPOSE: Informs Docker that the container listens on the specified network ports at runtime

- ENV: Sets an environment variable to the given value

- ADD & COPY: Both copy files into the image. COPY supports only basic copying of local files into the container, while ADD adds features such as local-only tar extraction and remote URL support

- ENTRYPOINT: Configures a container that will run as an executable

- VOLUME: Creates a mount point in the container for externally mounted storage; the host directory to mount is chosen when the container is run

- WORKDIR: Sets the working directory. Instead of proliferating instructions like RUN cd /some/dir && do-something, use WORKDIR to define the working directory.
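The WORKDIR and ADD/COPY advice above can be checked mechanically. Below is a hypothetical Python checker (`lint_dockerfile` is our own illustrative name, not a Docker tool) that flags `RUN cd` lines and `ADD` of a plain local file. It assumes one instruction per line and does not understand flags such as `--chown`.

```python
import re


def lint_dockerfile(text):
    """Return (line_number, message) warnings for two Dockerfile patterns.

    Hypothetical checker: flags `RUN cd ...` (prefer WORKDIR) and ADD of a
    plain local file (COPY is enough unless you need tar extraction or a URL).
    """
    warnings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if re.match(r"RUN\s+cd\s", line, re.IGNORECASE):
            warnings.append((number, "use WORKDIR instead of RUN cd"))
        match = re.match(r"ADD\s+(\S+)", line, re.IGNORECASE)
        if match:
            source = match.group(1)
            is_remote = source.startswith(("http://", "https://"))
            is_archive = source.endswith((".tar", ".tar.gz", ".tgz", ".tar.bz2", ".tar.xz"))
            if not (is_remote or is_archive):
                warnings.append((number, "use COPY for plain local files"))
    return warnings
```

For example, a Dockerfile containing `RUN cd /app && npm install` and `ADD server.js /demo/` would get one warning for each of those lines, while `ADD app.tar.gz /opt/` passes because it relies on tar extraction.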

And finally, we've reached the point where we can install StrongLoop Process Manager and breeze through the rest of the process! So, here's the step-by-step process you've been waiting for:

Installation of StrongLoop Process Manager on a Docker container

- Download and run the StrongLoop Process Manager container: `curl -sSL https://strong-pm.io/docker.sh | sudo /bin/sh`

- Verify the Docker image: `docker images`

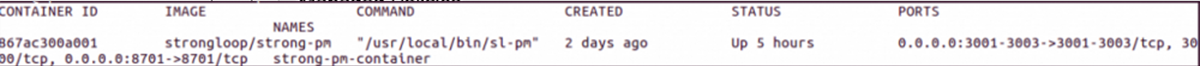

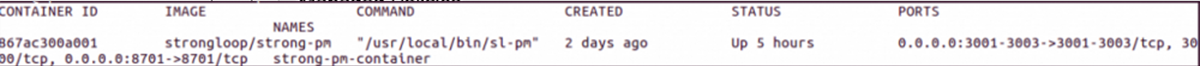

- Verify the Docker container and ports: `docker ps`

Note: Port 8701 is the deployment (Process Manager) port, while ports 3001 to 3003 are used by the applications deployed through it.

Once StrongLoop Process Manager is up and running, it's time to write the Dockerfile. Scripting the image build this way goes a long way in sparing us from running a series of complex commands by hand.

Building the Dockerfile

- Clone the code from your Git repository, or use a local copy if you already have one

- Create a file named Dockerfile: `touch Dockerfile`

- Edit the file in your favourite editor (we are using vi): `vi Dockerfile`

- Paste the content below, then save and exit

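```dockerfile
# Official Node.js 4 "onbuild" image: its ONBUILD triggers copy the build
# context into /usr/src/app and run npm install there
FROM node:4-onbuild

# WORKDIR creates /demo if it does not exist, so no separate mkdir is needed
WORKDIR /demo

# Multiple sources require a destination ending in /
COPY package.json server.js /demo/

# Node.js and npm already ship with the node image; only add the editor
RUN apt-get update && apt-get install -y vim \
    && rm -rf /var/lib/apt/lists/*

RUN npm install -g strongloop

# Mount point only; the host directory is attached at run time (see docker-compose.yml)
VOLUME /demo

EXPOSE 3000
CMD ["npm", "start"]
```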

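Note: We cloned the code from Git into the /home/demo/ directory on the host. The VOLUME instruction only declares a mount point inside the container and cannot mount a host directory by itself; /home/demo/ is mounted onto /demo/ in the container by the volumes entry in docker-compose.yml.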

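- The Dockerfile is ready. Now we'll create docker-compose.yml to build and run the container: `vi docker-compose.yml`. Paste the content below, then save and exit:

```yaml
version: '2'
services:
  web:
    # build the image from the Dockerfile in the current directory
    build: .
    # fixed name so the docker exec step can find the container
    container_name: demoappcontainer
    volumes:
      - "/home/demo/:/demo"
    ports:
      # host port 32769 -> port 3000 inside the container
      - "32769:3000"
```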

- With docker-compose.yml and the Dockerfile ready, build and start the container: `docker-compose up -d`

- Verify the image: `docker images`

- Verify the container: `docker ps`

- Log in to the container: `docker exec -ti demoappcontainer /bin/bash`

- Go to /demo/, then build and deploy the application using StrongLoop Process Manager: `cd /demo/`, `slc build`, `slc deploy http://remote.host:8701`

- The application is now deployed on the Docker container. Open any browser and go to http://remote.host:3001
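If you prefer a scripted check over the browser, here is a minimal Python sketch using only the standard library. The function name `app_is_up` and the example IP address `172.17.0.2` are hypothetical; substitute the IP address of your container for remote.host.

```python
import urllib.request


def app_is_up(url, timeout=5.0):
    """Return True if `url` answers with an HTTP status below 400.

    Scripted stand-in for opening the URL in a browser.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 400
    except OSError:
        # URLError, HTTPError (4xx/5xx) and socket timeouts are all OSError subclasses
        return False
```

Usage: `app_is_up("http://172.17.0.2:3001/")` should return True once the deployed app answers on port 3001.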

Remember, since you're using the StrongLoop Process Manager container, use that container's IP address in place of remote.host. That's all, folks! We've successfully:

- Familiarized ourselves with the Docker environment and its components

- Used StrongLoop to build our Node.js application into a deployable package

- Deployed that build on a StrongLoop Process Manager container

- Saved ourselves the hassle of building a separate Node.js environment just for that single, awesome app!

The next time you come across an application built on Node.js and want to deploy it without worrying about OS environments, you know what to do! And now that we already have a container up and running, you may choose to deploy any other app on the same host, irrespective of the OS!

Doesn't that save you a good bit of cost, and all the trouble involved in building a new environment from scratch?

Trust us, it does! If you have any questions, we’re here to answer. Just drop us a comment and we’ll be glad to reply in double-quick time. Until then, happy containerization!

Metadata

Metadata is data about data that describes the data warehouse. It is used for building, maintaining, managing and using the data warehouse. The role of metadata in a warehouse is different from that of the warehouse data, yet it plays an important role. The various roles of metadata are explained below:

- Metadata acts as a directory for the data warehouse's data.

- This directory helps the decision support system locate content in the data warehouse correctly.

- Metadata helps the decision support system map data as it is transformed from the operational environment to the data warehouse environment.

- Metadata also helps distinguish between lightly detailed data and highly summarized data.

- Query tools use metadata to build insights.

- ETL tools use metadata when cleaning, transforming and loading data.
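As an illustration of the directory and mapping roles, here is a minimal Python sketch of a metadata catalog. The table, column and source names, as well as the `locate` and `lineage` helpers, are hypothetical; a real warehouse would keep this information in its own metadata repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMetadata:
    """One metadata entry describing a warehouse column (illustrative fields)."""
    table: str
    column: str
    source: str          # operational system and column the value comes from
    transformation: str  # rule applied by the ETL process
    granularity: str     # "detailed" or "summarized"


# Hypothetical catalog: every table, column and source name here is made up.
CATALOG = [
    ColumnMetadata("sales_fact", "amount", "orders_db.order_lines.line_total",
                   "sum per order line", "detailed"),
    ColumnMetadata("sales_monthly", "amount", "sales_fact.amount",
                   "sum per month and region", "summarized"),
    ColumnMetadata("customer_dim", "region", "crm.accounts.state",
                   "map state to sales region", "detailed"),
]


def locate(column, granularity=None):
    """Directory lookup: which tables hold `column`, optionally at one granularity."""
    return [
        entry.table
        for entry in CATALOG
        if entry.column == column
        and (granularity is None or entry.granularity == granularity)
    ]


def lineage(table, column):
    """Mapping lookup: where a warehouse column comes from and how it was transformed."""
    for entry in CATALOG:
        if entry.table == table and entry.column == column:
            return entry.source, entry.transformation
    raise KeyError(f"{table}.{column} is not in the catalog")
```

Here `locate("amount", "summarized")` points a query tool at `sales_monthly`, while `lineage("customer_dim", "region")` tells an ETL developer which operational column feeds it and how.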

Data Marts

A data mart is an implementation of the data warehouse with a restricted scope of content and functions. Usually, a data mart is limited to a single department or part of the organization. Some of the complexities inherent in data warehouses are usually not present in data-mart-oriented projects. Data architecture modeling, for instance, which is a crucial technique for data warehouse development, is needed far less for data marts. Modeling for a data mart is also more end-user focused than modeling for a data warehouse. The primary advantages are:

- Data Segregation: Each data mart is developed without changing the others. This boosts information security and data quality.

- Easier Access to Information: These data structures provide an easy way to interpret the information stored in the database.

- Faster Response: Because a data mart holds only the subset of data derived for its users, querying it performs better than scanning the full data warehouse.

- Simple Queries: Due to the smaller size and volume of data, queries tend to be simpler.

- Full Detail on the Subject: Holds the detailed data for its subject, and might also provide summaries of that information.

- Specific to User Needs: This set of data is focused on end-user needs.

- Easy to Create and Maintain
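The following minimal Python sketch shows the idea behind a department data mart: it keeps only one department's rows from the warehouse (data segregation) and stores a monthly summary next to the detail. The rows and the `build_data_mart` function are hypothetical; in practice this would typically be done with SQL or an ETL tool against the warehouse.

```python
from collections import defaultdict

# Hypothetical warehouse fact rows; in practice these come from warehouse tables.
WAREHOUSE_SALES = [
    {"department": "retail", "month": "2017-01", "region": "north", "amount": 120.0},
    {"department": "retail", "month": "2017-01", "region": "south", "amount": 80.0},
    {"department": "retail", "month": "2017-02", "region": "north", "amount": 50.0},
    {"department": "wholesale", "month": "2017-01", "region": "north", "amount": 900.0},
]


def build_data_mart(rows, department):
    """Keep one department's rows, plus a monthly summary of their amounts."""
    detail = [row for row in rows if row["department"] == department]
    monthly = defaultdict(float)
    for row in detail:
        monthly[row["month"]] += row["amount"]
    return {"detail": detail, "monthly_totals": dict(monthly)}
```

`build_data_mart(WAREHOUSE_SALES, "retail")` returns three detail rows and the totals `{"2017-01": 200.0, "2017-02": 50.0}`; the wholesale row never enters the retail mart.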

Implementation Methodology

One of the keys to success in building a scalable data warehouse solution is an iterative approach. Data warehouse development can easily get bogged down if the scope is too broad. The simplest way to scope data warehouse development is to build one data mart at a time. Each data mart supports a single organizational element, and the scope of development is limited by that data mart's requirements. The initial data mart usually acts as the data warehouse proof of concept, so its scope must be restricted, yet sufficient to provide immediate and real benefits. Over time, additional data marts can be developed and integrated as enterprise needs and resource availability allow. The different phases of development are:

Determine Hardware and Software Platforms

A few questions must be answered before the right hardware and software platform can be identified, such as:

- How much data will the data warehouse hold?

- Is the platform economically feasible?

- Is the platform scalable, and is it optimized for data warehouse performance?

- Which OS, development software and database management system should be used?
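For the first question, a back-of-the-envelope estimate multiplies the expected rows per day by the average row size and the number of days retained. The Python sketch below is only that; the function name, the `overhead` multiplier for indexes and summary tables, and the sample figures are assumptions.

```python
def estimate_warehouse_bytes(rows_per_day, avg_row_bytes, retention_days, overhead=1.0):
    """Rough storage estimate for a fact table.

    raw size = rows per day * average row size * days retained;
    `overhead` is an assumed multiplier for indexes, staging copies and summaries.
    """
    if min(rows_per_day, avg_row_bytes, retention_days) < 0 or overhead < 1.0:
        raise ValueError("inputs must be non-negative and overhead must be >= 1.0")
    return int(rows_per_day * avg_row_bytes * retention_days * overhead)
```

With 1,000,000 rows a day of about 200 bytes each kept for 365 days, `estimate_warehouse_bytes(1_000_000, 200, 365)` gives 73,000,000,000 bytes (about 73 GB); an assumed `overhead=1.5` raises that to 109,500,000,000 bytes.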

Based on the above information, the right decisions can be made and procurement can proceed.

Develop Source Integration and Data Transformation Platforms

Integration and transformation programs are necessary to extract information from operational systems and databases for the initial and subsequent loads. Depending on the data volume and complexity, a separate program may be needed for the initial load. Update programs are usually simpler and pick up only the data changed since the last run. Over time, update programs will be changed to reflect changes in both operational and other data sources.

Develop Data Security Policies and Procedures

A data warehouse is a read-only source of enterprise information, so there is no need to worry about create, update and delete capabilities. However, there is a need to balance protecting corporate assets against unauthorized access with making data accessible to the users who need it for decision-making. Security also covers the physical security of the data warehouse: data must be protected from loss and damage, and enough redundancy and backup measures must be in place.
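Update programs that pick up only the data changed since the last run are often built around a watermark. The Python sketch below assumes each source row carries a hypothetical `updated_at` timestamp and uses an in-memory dict as a stand-in for the warehouse table; it illustrates the idea, not a production ETL job.

```python
from datetime import datetime


def incremental_extract(source_rows, last_run):
    """Return the rows changed after `last_run`, plus the new watermark.

    Rows with updated_at <= last_run were picked up by an earlier run and are skipped.
    """
    changed = [row for row in source_rows if row["updated_at"] > last_run]
    new_watermark = max((row["updated_at"] for row in changed), default=last_run)
    return changed, new_watermark


def load(warehouse, rows, key="id"):
    """Upsert rows into an in-memory stand-in for a warehouse table, keyed by `key`."""
    for row in rows:
        warehouse[row[key]] = row
```

An initial load can reuse the same function with `last_run=datetime.min`, which selects every row; each later run passes the watermark returned by the previous one.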

Design User Interfaces

Data availability is one side of the coin; how easily and effectively users can consume that data is what defines the usability of such a solution. To make data easy to consume, most organizations prefer graphical views. For performance, developers must ensure that the platform supports, and is optimized for, end-user needs. Users must be able to drill down to more detailed views as well as roll up to a view of the overall health of the organization. A number of tools can be integrated with the existing data warehouse to provide these capabilities, while certain specific, customized views will need manual development. Find out how India's fastest-growing logistics company is driving key business decisions by running real-time reporting on top of Redshift.
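Drill-down and roll-up amount to aggregating the same facts at different levels of a hierarchy. This minimal Python sketch uses a hypothetical region and city hierarchy with made-up figures; in a real deployment the same aggregation would usually run as GROUP BY queries inside the warehouse.

```python
from collections import defaultdict

# Hypothetical sales facts at the most detailed level: (region, city, amount).
SALES = [
    ("north", "Delhi", 120.0),
    ("north", "Jaipur", 30.0),
    ("south", "Chennai", 75.0),
    ("south", "Bengaluru", 25.0),
]


def roll_up(facts, level):
    """Aggregate amounts at `level`: "city" (most detail), "region", or "total"."""
    totals = defaultdict(float)
    for region, city, amount in facts:
        if level == "city":
            key = (region, city)
        elif level == "region":
            key = (region,)
        elif level == "total":
            key = ()
        else:
            raise ValueError(f"unknown level: {level}")
        totals[key] += amount
    return dict(totals)


def drill_down(facts, region):
    """Show the city-level detail behind one region's total."""
    by_city = roll_up(facts, "city")
    return {city: amount for (r, city), amount in by_city.items() if r == region}
```

`roll_up(SALES, "total")` gives the organization-wide figure `{(): 250.0}`, `roll_up(SALES, "region")` splits it into north and south, and `drill_down(SALES, "north")` shows the two cities behind the north total.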